One of the most confusing parts of photo editing, for me, is finding simple definitions for the concepts built into photo editing software. Some of them, like layers, blend modes and opacity, are things many people ask about. Others are subtler, such as the difference between pixel layers, raster layers, adjustment layers and fill layers.

What are filters and why are they separate from adjustments? And what the heck is rasterizing anyway? Or the difference between “rasters” and “vectors”? And what is “rendering”? And of course, the single most important concept – non-destructive vs. destructive editing.

Despite shooting for many years, I still stumble my way through explaining these concepts. So I finally went looking for the real answers. It wasn’t as easy as I thought it would be. But I persevered. Here’s what I found.

Most of us who have worked with photo-editing software, any software, for any length of time are able to move through edits with some level of confidence. But I don’t think many of us understand exactly what is happening when we add a layer or apply a filter. That hit home recently when I watched a YouTube video in which a very well-known instructor said “I’ve given up trying to explain how this works. I just know I like how it looks.” Frankly, I had the same experience in school a few years ago, when I was first learning photo editing. I was taught a sequence of steps, but not why I needed each step or what it did. That bugs me to this day.

In their defense, some photographers work purely by the way things look. They figure out what a tool does by trying it, and once they’ve internalized that, that’s what they use it for from then on. Being able to explain it to someone else isn’t part of the equation. I respect that, but I also think they are missing out on fully leveraging the software they’ve spent money on. Different combinations of tools in different situations can yield amazing results.

I am a geek and a nerd. I need to know why things work the way they do. Here’s my first discovery: many of the concepts I’m trying to explain are the result of legacy decisions in software development. For example, layers first appeared in Adobe Photoshop 3.0 in 1994, a full 6 years after the original product was developed. They were introduced to let users edit isolated areas of an image without affecting the rest, and to keep different versions of an edited image. Until then, users had to save each edit as a separate file if they wanted to retain several versions of an edited image.

Even when legacy functionality is no longer needed, because of advances in hardware or because previously labour-intensive manual steps have been automated, some developers tend to retain almost all of it when they release an “upgrade”. Why? Adobe certainly believes this strategy helps it keep its existing user base while adding new users. So many of the concepts I need to explain may only be there because of this strategy.

Companies competing with Adobe often try to mimic this legacy functionality, believing it will attract some of Adobe’s users. Others, such as Serif’s Affinity Photo team, design from the ground up. So it may become less important in future to understand these specific concepts.

Until then, let’s get back to those definitions.

A pixel layer is the image data captured by your camera sensor. It is either interpreted in the camera and delivered to your editing software as a .jpg file or, if you shoot RAW, brought into the editing software as raw data and interpreted there. (By the way, RAW processing was not available in Photoshop until 2003.) A pixel layer is made up of, you guessed it, pixels. Each pixel has a colour value and a brightness, or tone, value. The result delivered to the editing software is often labelled as the “background” image. Depending on your software, it may or may not be a locked layer, to prevent accidental alterations to the original image.
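
For fellow geeks, here is a minimal Python sketch of the “colour value plus brightness value” idea. It assumes a pixel layer is simply a grid of 8-bit red, green and blue values (a simplification: real files add bit depths, colour profiles and metadata), and it uses the standard library’s `colorsys` module to split each pixel into hue and saturation (its colour) and value (its brightness). The names `PixelLayer` and `colour_and_tone` are made up for illustration.

```python
import colorsys

# Hypothetical representation: a pixel layer as rows of (red, green, blue)
# tuples, each channel an integer from 0 to 255 (8 bits per channel).
PixelLayer = list[list[tuple[int, int, int]]]


def colour_and_tone(pixel: tuple[int, int, int]) -> tuple[float, float, float]:
    """Split one RGB pixel into hue and saturation (its colour) and value (its brightness).

    colorsys expects floats between 0.0 and 1.0, so each channel is scaled first.
    """
    r, g, b = (channel / 255 for channel in pixel)
    return colorsys.rgb_to_hsv(r, g, b)


background: PixelLayer = [
    [(255, 0, 0), (128, 128, 128)],  # a pure red pixel and a mid-grey pixel
]

for row in background:
    for pixel in row:
        hue, saturation, brightness = colour_and_tone(pixel)
        print(pixel, f"hue={hue:.3f} saturation={saturation:.3f} brightness={brightness:.3f}")
```

The red pixel comes out fully saturated at full brightness; the grey pixel has no saturation at all, only a brightness of about 0.5.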

An adjustment layer, when applied over a pixel layer, produces an “adjusted” image. You see the changes on the screen, but the layer itself does not directly change the image. Instead, it contains a set of instructions, recorded by the software, that define how the image should be adjusted. The software reads those instructions and presents a preview of the adjusted image to you. The original pixels stay untouched: if you were to exit the software and open the original photo outside of it, you would see only the original image.
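
Here is how I picture that in code: a minimal Python sketch, assuming an image is a grid of RGB tuples and assuming a single, hypothetical `BrightnessAdjustment` layer type. The layer stores only an instruction (an amount to add), and the preview is computed into a new image, so the background is never modified and the layer can be changed and re-rendered at any time. Real adjustment layers hold far richer instructions (curves, levels, hue shifts), but the principle sketched here is the same.

```python
from dataclasses import dataclass

Pixel = tuple[int, int, int]   # (red, green, blue), each 0-255
Image = list[list[Pixel]]      # rows of pixels


def clamp(value: float) -> int:
    """Keep a channel inside the valid 0-255 range."""
    return max(0, min(255, round(value)))


@dataclass
class BrightnessAdjustment:
    """Hypothetical adjustment layer: it stores an instruction, not pixels."""
    amount: int  # added to every channel when the layer stack is rendered

    def apply(self, pixel: Pixel) -> Pixel:
        r, g, b = pixel
        return (clamp(r + self.amount), clamp(g + self.amount), clamp(b + self.amount))


def render_preview(background: Image, adjustments: list[BrightnessAdjustment]) -> Image:
    """Build a new image for display; the background is never modified."""
    preview = []
    for row in background:
        new_row = []
        for pixel in row:
            for layer in adjustments:   # apply each instruction in stack order
                pixel = layer.apply(pixel)
            new_row.append(pixel)
        preview.append(new_row)
    return preview


background: Image = [[(100, 120, 140), (250, 10, 0)]]
brighten = BrightnessAdjustment(amount=20)

print(render_preview(background, [brighten]))  # the adjusted preview
print(background)                               # the original pixels, unchanged

brighten.amount = -20                           # "re-open" the layer and change it
print(render_preview(background, [brighten]))
```

Changing `amount` and rendering again is the code equivalent of double-clicking an adjustment layer and moving the slider: nothing has to be undone, because nothing was ever written into the pixels.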

A fill layer can do one of two things. It can apply any solid colour to the image, which can then be restricted to a specific area of the image or blended with the underlying image in a specific way. Or, when black or white is used as the fill colour, a fill layer can work in conjunction with a mask to reveal or conceal areas of the photograph underneath.
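
To see how a mask restricts a fill, here is a minimal Python sketch. It assumes a plain “normal” blend (real editors offer many blend modes), a mask stored as one grey value per pixel, and the usual convention that white in the mask shows the layer while black hides it. The function name `composite_fill` is hypothetical.

```python
Pixel = tuple[int, int, int]   # (red, green, blue), each 0-255
Image = list[list[Pixel]]
Mask = list[list[int]]         # 0 (black) to 255 (white), one value per pixel


def composite_fill(background: Image, fill_colour: Pixel, mask: Mask,
                   opacity: float = 1.0) -> Image:
    """Lay a solid colour over the background, limited by a mask.

    White (255) in the mask shows the fill fully, black (0) hides it,
    and greys blend proportionally. Opacity scales the whole layer.
    """
    result = []
    for row, mask_row in zip(background, mask):
        new_row = []
        for pixel, mask_value in zip(row, mask_row):
            alpha = (mask_value / 255) * opacity   # how much of the fill shows here
            new_row.append(tuple(
                round(base * (1 - alpha) + fill * alpha)
                for base, fill in zip(pixel, fill_colour)
            ))
        result.append(new_row)
    return result


background = [[(100, 100, 100), (100, 100, 100)]]
mask = [[255, 0]]  # reveal the fill on the left pixel, conceal it on the right

print(composite_fill(background, (200, 0, 0), mask))
print(composite_fill(background, (200, 0, 0), mask, opacity=0.5))
```

The left pixel turns red (or pinkish at half opacity) while the right pixel, hidden by black in the mask, keeps the photograph’s original grey.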

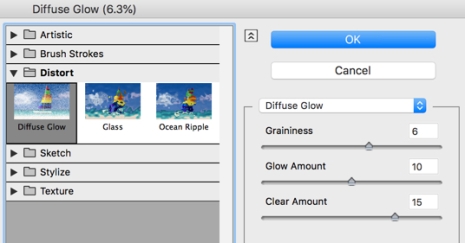

A filter was initially the only option for editing photos digitally, and it was by definition destructive. It acted directly on a pixel layer and “filtered” out specified parameters, such as colour values, to create, for example, a black and white image. Filters can also change the tonal values, and the position and relationship of pixels in the image, to introduce new effects such as blur or distortion. In the early days, this was a one-way street. Once you applied a filter, you could not go back; the only way to get rid of it was to delete the layer it had been applied to, which was hopefully a separate copy. Today, Adobe Photoshop filters have evolved to be “smart” and non-destructive, working on “smart objects” and performing the same filtering tasks as before, but keeping them adjustable. This is a classic example of Adobe retaining a legacy function while making it more flexible. In Affinity Photo, filters are in principle no different from adjustment layers but are kept separate to appeal to that legacy user base.
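
By contrast with an adjustment layer, an old-style filter rewrites the pixels themselves. This minimal Python sketch shows a hypothetical black and white filter that overwrites every pixel in place. The grey conversion uses the common Rec. 601 luma weights (0.299, 0.587, 0.114); that choice is an assumption, since each editor uses its own conversion.

```python
Pixel = tuple[int, int, int]   # (red, green, blue), each 0-255
Image = list[list[Pixel]]


def black_and_white_filter(image: Image) -> None:
    """Destructive filter: overwrite every pixel with its grey equivalent.

    Nothing is returned and nothing is kept; the colour information in
    `image` is replaced for good.
    """
    for row in image:
        for x, (r, g, b) in enumerate(row):
            grey = round(0.299 * r + 0.587 * g + 0.114 * b)
            row[x] = (grey, grey, grey)  # the original colour is gone


layer = [[(255, 0, 0), (0, 0, 255)]]  # a red pixel and a blue pixel
black_and_white_filter(layer)
print(layer)
```

Afterwards nothing in `layer` records that one pixel was red and the other blue, which is why the only “undo” was throwing the layer away. Running the filter on a copy first, for example `[row[:] for row in layer]`, is the code equivalent of duplicating the layer before filtering, which is what the better plugins do for you.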

All of the plugins that can be added to an image editor are also filters. So whether you use Nik, Topaz or any other third-party product, the result is changes to pixels. The good ones create their own copy of the pixel layer to work on.

Vector objects, or vectors, in contrast to pixel objects, use mathematical equations to define the position of the various elements of the object relative to each other. Rather than recording, for example, that the blue cloud is 200 pixels from the upper edge of the file folder and 500 pixels tall, a vector object would record that the cloud sits directly below the curve at the top of the file folder and takes up 3/4 of the folder’s height. The main benefit: vector objects can be resized to any size, larger or smaller, and never lose clarity or resolution. A pixel object, in contrast, looks softer or blockier each time it is enlarged, because the same 500 pixels of the cloud are spread over a larger and larger physical space. That said, today’s “upscaling” algorithms keep getting better, removing some of the concern about resizing pixel objects.
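
The difference shows up clearly in a minimal Python sketch. Here a hypothetical `VectorCircle` stores only geometry (a centre and a radius), so resizing is just multiplication, while `enlarge_pixels` enlarges a tiny grey-scale pixel grid by repeating each pixel (nearest-neighbour resampling). Real editors use smoother resampling methods, and the upscaling algorithms mentioned above go further still; nearest-neighbour is used here only because it makes the point most visibly.

```python
from dataclasses import dataclass


@dataclass
class VectorCircle:
    """A shape stored as geometry: centre and radius, not pixels."""
    cx: float
    cy: float
    radius: float

    def scaled(self, factor: float) -> "VectorCircle":
        # Resizing just multiplies the numbers; no detail is lost.
        return VectorCircle(self.cx * factor, self.cy * factor, self.radius * factor)


def enlarge_pixels(image: list[list[int]], factor: int) -> list[list[int]]:
    """Nearest-neighbour enlargement: each pixel becomes a factor x factor block.

    No new detail appears; the same pixels simply cover more area.
    """
    return [
        [value for value in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


print(VectorCircle(2, 2, 1).scaled(4))
print(enlarge_pixels([[0, 255], [255, 0]], 2))
```

The circle is still a perfect description at four times the size; the 2 x 2 checkerboard just becomes a 4 x 4 grid of bigger blocks.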

To be perfectly clear, in my own work pixel objects come from the camera, while vector objects are generated by the computer. So, although a vector object is sometimes called an image, it really isn’t, at least not to me. In a world where graphic design has blended so completely with image processing, the distinction may not matter much. Vector objects are often used to enhance pixel objects, such as adding ethereal wings to a posed portrait of a costumed person with head bowed. I do occasionally add text to some of my work, and text is also a vector object. But generally speaking, I don’t use vectors.

I won’t go into blend modes or masking, since lots of information has been published on these concepts. Suffice it to say that all layers can be blended or masked.

As noted, many of the layers above are really just sets of instructions. At some point, though, those instructions have to actually be applied to produce a finished image.

Rasterizing an image means converting one or more layers containing vectors into pixel layers. They can then be edited like any other pixel layer. If all the layers were originally vectors and all have been converted, the result is sometimes called a “raster image”.
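
Rasterizing can be sketched in a few lines of Python. This minimal version assumes a hypothetical `VectorCircle` (centre and radius) and tests the centre of each pixel in a grid of the requested size: white (255) if the centre falls inside the circle, black (0) otherwise. Real rasterizers also anti-alias the edges, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass
class VectorCircle:
    """A shape stored as geometry, in pixel units of the target canvas."""
    cx: float
    cy: float
    radius: float


def rasterize(circle: VectorCircle, width: int, height: int) -> list[list[int]]:
    """Turn a vector circle into a grey-scale pixel layer of the given size.

    Each pixel is tested at its centre: 255 (white) if the centre lies
    inside the circle, 0 (black) otherwise. No anti-aliasing is done.
    """
    layer = []
    for y in range(height):
        row = []
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # centre of this pixel
            inside = (px - circle.cx) ** 2 + (py - circle.cy) ** 2 <= circle.radius ** 2
            row.append(255 if inside else 0)
        layer.append(row)
    return layer


for row in rasterize(VectorCircle(4, 4, 3), 8, 8):
    print("".join("#" if value else "." for value in row))
```

Once rasterized, the circle is just a grid of numbers, so enlarging it later runs into the pixel-resizing limits described above. Going back to the vector and rasterizing again at the larger size does not.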

Similarly, rendering is defined, at least in the computer field, as producing a viewable, photorealistic image from multiple graphics objects, including vectors. The image is synthesized from data that includes shape, position, viewpoint, texture, shading, lighting and colour information. It’s interesting, though, that pen-and-paper artists can also produce “renderings”, which are often stylized, simplified versions of an imagined or existing scene. But I digress…

Merging or flattening an image means applying all the instructions from the adjustment, fill, filter or raster layers in an image file to the background pixel layer, creating a new pixel layer that is visible in the layer stack. Its main benefit is reducing the overall file size by removing the layers that were flattened. That also means, of course, that any earlier changes can no longer be adjusted.
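
Flattening can be sketched the same way. In this minimal Python version each layer is modelled, as an assumption, as a small function that maps an old grey value to a new one; the hypothetical `flatten` bakes them all into one new pixel layer and hands back an empty layer stack, which is exactly why the earlier changes can no longer be adjusted.

```python
from typing import Callable

# Hypothetical model: each layer is an instruction mapping an old grey
# value (0-255) to a new one.
Layer = Callable[[int], int]


def flatten(background: list[list[int]],
            layers: list[Layer]) -> tuple[list[list[int]], list[Layer]]:
    """Bake every layer into a single new pixel layer.

    Returns the new pixels and an empty layer stack: once flattened,
    the individual instructions are gone.
    """
    pixels = [row[:] for row in background]  # start from a copy of the background
    for layer in layers:                       # apply the stack bottom to top
        pixels = [[layer(value) for value in row] for row in pixels]
    return pixels, []                          # new pixel layer, empty layer stack


def brighten(value: int) -> int:
    return min(255, value + 10)


def invert(value: int) -> int:
    return 255 - value


flat, stack = flatten([[0, 100]], [brighten, invert])
print(flat, stack)
```

The order of the stack matters here just as it does in an editor: brightening and then inverting gives a different result from inverting and then brightening.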

Exporting an image means applying the instructions from all layers in an image file to the background pixel layer and then producing a separate image file that can be shared with others. You’ll see no change in the layer stack of the original image file, but you will now have a second file in a format that can stand on its own. Depending on your software, exporting can be accomplished through a detailed export dialogue, or by simply selecting “Save As” from the menu and selecting the format desired.
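
Exporting differs from flattening in one important way: the result goes to a new file and the layer stack stays as it was. This minimal Python sketch shows that, assuming grey-scale layers modelled as functions and writing the plain-text PGM (portable graymap) format only because it is simple enough to write by hand; a real export would write JPEG or TIFF through an imaging library. The function `export_pgm` and the file name `exported.pgm` are hypothetical.

```python
import os
import tempfile


def brighten(value: int) -> int:
    return min(255, value + 10)


def export_pgm(background: list[list[int]], layers: list, path: str) -> None:
    """Apply every layer to a copy of the background and write the result
    as a plain PGM file. The background and the layer list are not changed."""
    rendered = [row[:] for row in background]
    for layer in layers:
        rendered = [[layer(value) for value in row] for row in rendered]
    height, width = len(rendered), len(rendered[0])
    with open(path, "w", encoding="ascii") as f:
        f.write(f"P2\n{width} {height}\n255\n")  # format marker, size, maximum grey value
        for row in rendered:
            f.write(" ".join(str(value) for value in row) + "\n")


background = [[0, 100], [200, 250]]
layers = [brighten]
out_path = os.path.join(tempfile.gettempdir(), "exported.pgm")
export_pgm(background, layers, out_path)

with open(out_path, encoding="ascii") as f:
    print(f.read())
```

After the export, `background` and `layers` are exactly as they were, so you can keep editing the original file and export again later.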

And there you have it. All the mysteries of life revealed. I wrote this post more for me than for you, but I hope it helps you make some sense of the way your photo editing software works and how you can best take advantage of it.